Instructional Empowerment founded an independent Applied Research Center to verify that every service we provide to our partner schools and districts is evidence-based, impactful, and replicable. The Applied Research Center systematically uses high-quality research standards and state-of-the-art methods and tools to develop practical solutions for real-world social problems faced by educators.

Applied Research Center

We Set High Research Standards Because We Care About Your Success

Federally Certified Researcher Validates Our Work

Dr. Lindsey Devers Basileo, a What Works Clearinghouse (WWC) Certified Reviewer, leads our Applied Research Center. She has over 15 years of experience in social science research. As our Director of Research, Dr. Basileo has an ethical obligation to collect and analyze data in an unbiased way. She conducts outcome evaluations of our treatment programs using rigorous research methods that adhere to WWC standards. She also continually validates our instruments and metrics against the measures that matter most to school districts. We always seek outside parties to peer review and confirm our results. This two-pronged approach to applied research (in-house research plus external peer review) keeps us accountable to both our customers and our mission.

What is the What Works Clearinghouse and why does this matter to you?

The WWC, part of the U.S. Department of Education’s Institute of Education Sciences, reviews the evidence on the effectiveness of programs, policies, and practices using a consistent and transparent set of standards. The WWC helps educators find high-quality research and interventions that meet the evidence requirements of the Every Student Succeeds Act (ESSA). These requirements are designed to ensure that state departments of education, school districts, and schools can identify practices that meet the needs of diverse student populations.

We Meet Evidence-Based ESSA Levels

Everything we do is both research-based and evidence-based. We start with a strong theoretical research basis, but we don’t stop there. We rigorously test our methods to prove they achieve replicable results in a range of settings and across diverse student populations.

Every activity, strategy, and intervention we use has met, or is in the process of meeting, the criteria for evidence-based practices under the federal Every Student Succeeds Act (ESSA).

“Evidence requirements under the Every Student Succeeds Act (ESSA) are designed to ensure that states, districts, and schools can identify programs, practices, products, and policies that work across various populations.”

https://ies.ed.gov/ncee/wwc/essa

Dr. Lindsey Devers Basileo, Director of Research

Ph.D. in social science, Florida State University, 2010

Nationally Certified What Works Clearinghouse Reviewer: Group Design Standards (Versions 4.0 & 4.1)

Research Specialties:

- Process & Outcome Evaluations (15+ yrs.)

- Experimental & Quasi-experimental Designs (15+ yrs.)

- Qualitative Methods (15+ yrs.)

- Questionnaire Design & Probability Sampling (15+ yrs.)

- Propensity Score Matching (10+ yrs.)

- Hierarchical Linear Modeling (10+ yrs.)

Dr. Merewyn E. (“Libba”) Lyons, Senior Research Analyst

Ed.D. in Educational Leadership, Nova Southeastern University, 2009

Member of the Center for Self-Determination Theory, the American Educational Research Association, and the European Association for Research on Learning and Instruction.

Research Specialties:

- Educational Psychology with a focus on understanding the effect of motivation on teaching, learning, and educational leadership

Researcher of Deeper Learning, Motivation, and Leadership

Dr. Merewyn E. (“Libba”) Lyons is a Senior Research Analyst with the Applied Research Center of Instructional Empowerment. Her primary research interest is educational psychology, with a focus on deeper learning and understanding the effect of motivation on teaching, learning, and educational leadership. She is a member of the Center for Self-Determination Theory and of the American Educational Research Association.

Dr. Lyons is a retired K-12 schoolteacher and administrator and a decorated, retired United States Navy officer who held high-level leadership positions on major Navy staffs and served as a commanding officer.

In her second career as an educator, she served as a classroom teacher, school administrator, and district executive director of large federally funded grant projects. Dr. Lyons brings over 40 years of deep experience in leadership, management, and education to the Applied Research Center.

Objective, Independent Research with Rigorous Testing

To ensure objective, stringent research protocols, the Applied Research Center operates autonomously from the rest of the company. This independence allows the Applied Research Center team to hold the rest of our organization accountable to the highest research standards in every aspect of our work, from collecting data from our field faculty to rigorously testing our hypotheses.

We rigorously test all our school advancement methods to ensure they maximize positive effects on student outcomes. We are so dedicated to our research methodologies that our field staff are even taught “how to think like a researcher.” We invest significant resources in the Applied Research Center because it continuously improves the services we provide to our partners.

Our Definition of Deeper Learning

Instructional Empowerment defines deeper learning as ALL students developing into leaders of their own learning. They collaborate in teams, engage in rich discourse, and tackle rigorous tasks that prepare them for both academic and real-world success.

We Take Your Trust Seriously

Trust is earned. We go to great lengths to verify our results through the Applied Research Center because this work matters to us.

The Applied Research Center strengthens ethical norms in the scientific and educational community. We transparently share data with our partners regardless of the findings because trust comes from transparency. We do not conceal results; we aim to learn from them. This transparency is ultimately what helps our partner schools move forward: it opens constructive conversations about areas for improvement, and those conversations often clear the path to progress.

Instructional Empowerment cares deeply about our mission of ending generational poverty and eliminating achievement gaps through redesigned, rigorous Tier 1 instruction that ensures deeper learning for ALL students, and it is imperative to us that our methodologies are effective. Our team members have a strong and consistent track record in school advancement because we align ourselves to research and hold ourselves to the highest standards set by our independent Applied Research Center.

We have an obligation to strengthen the ethical norms in the educational community because knowing the objective results is the only way in which we can move all schools forward.

Rigorous Research Methodology

The Applied Research Center thrives on building robust research designs where doing so is most difficult: in real school and district settings.

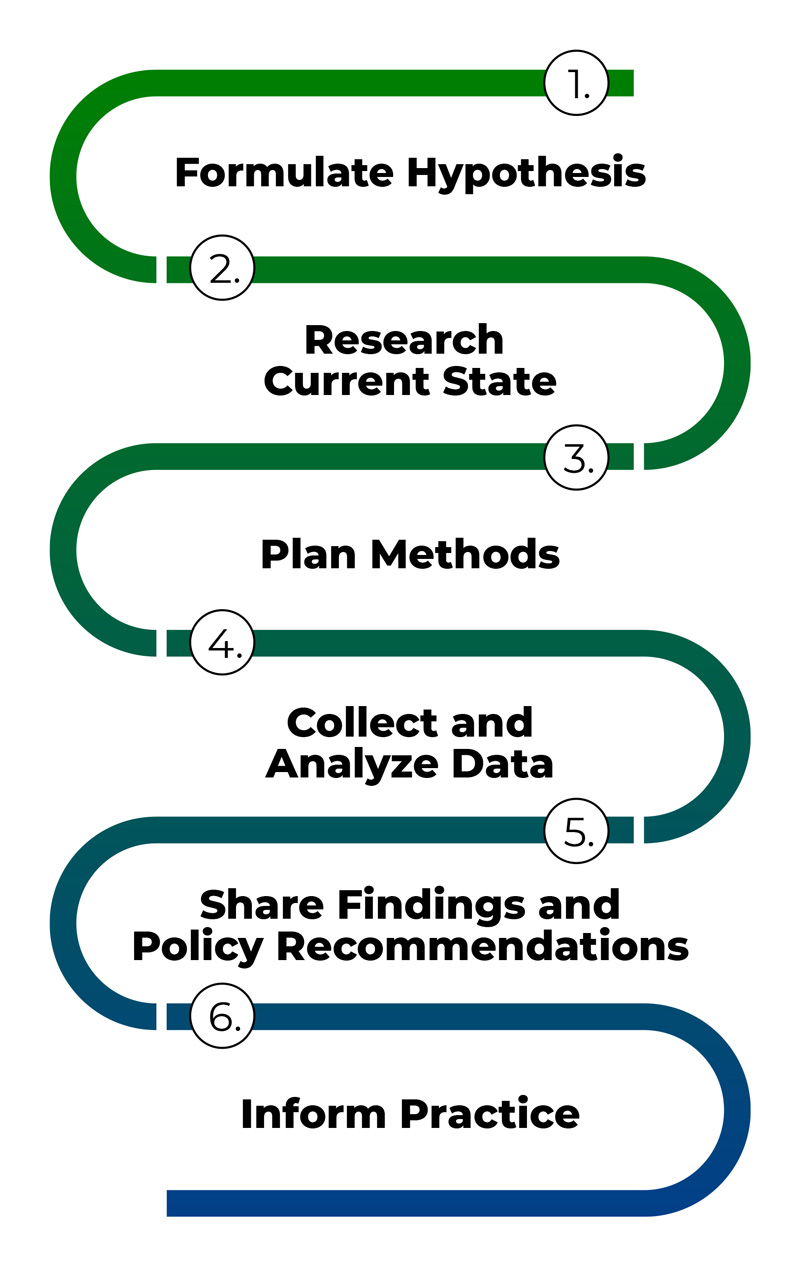

Our goal is to synthesize data and visualize findings for the intended audience so that reports are meaningful and actionable. We use an efficient, 6-step process with a robust research design that is respectful of our participants’ time and resources.

Research Design

We use data triangulation when conducting process and outcome evaluations. Each evaluation combines primary data collection (observations and surveys), secondary data collection (district and state student assessment data and any other leading indicators of school effectiveness), and qualitative research interviews with students, teachers, and administrators. All data are synthesized to estimate the impact and effectiveness of our services.

Each research design is built to answer the specific problem it addresses. We always aim for the highest standard of evidence; however, if an experimental design with random assignment is not feasible for the project, the next step is a quasi-experimental design with a comparison group of similar students in the district. Comparison groups are usually selected through propensity score matching, and baseline equivalence is always checked to confirm that the groups were equivalent before the intervention. If no comparable groups are available, we compare the school to itself over time by calculating learning rates by grade level. We also use correlational analysis to identify inputs for more sophisticated analyses and to understand the relationships between variables.
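To make the matching step more concrete, here is a minimal sketch of greedy 1:1 nearest-neighbor propensity score matching without replacement. It assumes propensity scores have already been estimated (for example, with a logistic regression of partner-school status on baseline covariates). The function name, student IDs, scores, and the 0.05 caliper are hypothetical illustrations, not the Applied Research Center's actual procedure or settings.

```python
from typing import Dict, List, Tuple


def match_nearest_neighbor(
    treated: Dict[str, float],
    comparison: Dict[str, float],
    caliper: float = 0.05,  # hypothetical maximum allowed score distance
) -> List[Tuple[str, str]]:
    """Greedy 1:1 nearest-neighbor matching without replacement.

    Both dicts map a (hypothetical) student ID to an already-estimated
    propensity score. Treated students with no comparison student
    within `caliper` are left unmatched.
    """
    available = dict(comparison)  # comparison students not yet used
    pairs: List[Tuple[str, str]] = []
    # Process treated students from highest to lowest score (one simple ordering choice)
    for t_id, t_score in sorted(treated.items(), key=lambda kv: -kv[1]):
        if not available:
            break
        # Closest remaining comparison student by absolute score distance
        c_id = min(available, key=lambda cid: abs(available[cid] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # without replacement: each control used once
    return pairs


# Hypothetical propensity scores
treated_scores = {"t1": 0.62, "t2": 0.40, "t3": 0.90}
comparison_scores = {"c1": 0.60, "c2": 0.43, "c3": 0.10}
print(match_nearest_neighbor(treated_scores, comparison_scores))
```

Greedy matching is the simplest option; optimal matching or matching with replacement are common alternatives, and after any matching the groups would still need the baseline equivalence check the paragraph describes.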

As with any project, applied research involves tradeoffs; our partner districts’ time and resources are the largest factors in how rigorous a study can be. Regardless of these tradeoffs, we always provide actionable results with appropriate caveats based on the methods and design. Every investigation that comes into the Applied Research Center will have actionable policy implications that are understandable to practitioners.

Common Research Questions

Measuring Student Learning:

1. What is the norm for academic growth nationally?

2. What is the academic growth in the district compared to national norms?

3. Are IE partner school students experiencing academic growth at a faster rate?

4. Is the rate statistically significant?

Method & Solution:

- Hedges’ g effect sizes for academic growth are determined from national norming studies and applied to the district context. They estimate the growth in average student achievement from one period to the next. This growth reflects both the effects of schooling and other developmental influences on students during the year.

- Customized learning rate norms for the district are calculated and compared to national norms.

- IE partner school student learning rates are calculated and compared to district and national norms.

- Propensity score matching is used to create two comparable groups, and hierarchical linear modeling is used to account for the clustering of students within schools. Baseline equivalence is always investigated.
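As a rough illustration of the effect-size arithmetic, the sketch below computes Hedges’ g between two groups using the pooled standard deviation and the small-sample correction factor ω = 1 - 3/(4N - 9) from the WWC Procedures and Standards Handbook, then applies the WWC baseline equivalence thresholds (|g| ≤ 0.05 satisfies; 0.05 < |g| ≤ 0.25 requires statistical adjustment; |g| > 0.25 does not satisfy). The function names and scores are hypothetical, and the sketch does not reproduce the national norms or the hierarchical linear model described in the list, which require student-level data nested within schools.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def hedges_g(treatment: Sequence[float], comparison: Sequence[float]) -> float:
    """Standardized mean difference with the small-sample correction."""
    n1, n2 = len(treatment), len(comparison)
    s1, s2 = stdev(treatment), stdev(comparison)  # sample SDs (n - 1 denominator)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    omega = 1 - 3 / (4 * (n1 + n2) - 9)  # small-sample correction factor
    return omega * (mean(treatment) - mean(comparison)) / pooled_sd


def baseline_equivalence(g: float) -> str:
    """Classify a baseline (pretest) difference using WWC thresholds."""
    if abs(g) <= 0.05:
        return "satisfies"
    if abs(g) <= 0.25:
        return "requires statistical adjustment"
    return "does not satisfy"


# Hypothetical pretest scale scores for matched groups
pretest_partner = [2, 3, 4, 5, 6]
pretest_comparison = [1, 2, 3, 4, 5]
g_pre = hedges_g(pretest_partner, pretest_comparison)
print(round(g_pre, 3), baseline_equivalence(g_pre))
```

Applied to pretest scores, the result feeds the baseline equivalence check; applied to outcome scores, the same function gives the treatment effect size.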

Measuring Subgroup Gaps

1. What are the subgroup gaps in the district?

2. Are IE partner school students closing gaps at a faster rate?

Method & Solution:

- Subgroup gap benchmarks are calculated by taking the difference between the mean scale score of each subgroup and that of its counterpart, then dividing it by the pooled standard deviation for all students.

- Subgroup gaps are calculated for IE partner school students to assess change over time and to report whether gaps are closing or widening.
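A minimal sketch of the subgroup gap benchmark follows: the subgroup mean minus the counterpart mean, divided by the standard deviation of all students’ scores. We assume here that “pooled standard deviation for all students” means the sample standard deviation of the combined scores; the function names and scale scores are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def subgroup_gap(subgroup: Sequence[float], counterpart: Sequence[float]) -> float:
    """Gap in SD units: (subgroup mean - counterpart mean) / SD of all students."""
    all_scores = list(subgroup) + list(counterpart)  # assumption: combined sample SD
    return (mean(subgroup) - mean(counterpart)) / stdev(all_scores)


def gap_narrowed(earlier_gap: float, later_gap: float) -> bool:
    """True when the gap moved closer to zero; False if it held or widened."""
    return abs(later_gap) < abs(earlier_gap)


# Hypothetical scale scores for a subgroup and its counterpart in two years
year1 = subgroup_gap([200, 210], [220, 230])
year2 = subgroup_gap([205, 215], [220, 230])
print(round(year1, 3), round(year2, 3), gap_narrowed(year1, year2))
```

Expressing gaps in standard deviation units lets gaps from different grades or assessments be compared on one scale; a negative value means the subgroup scores below its counterpart.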

The Power of Research-Validated Metrics

Project Accountability with Data Reporting

Our Applied Research Center provides regular data reports to our school and district partners. We hold ourselves accountable to the district’s shared goals, and we use our research-validated metrics to ensure you are on track to achieve success.

Every month, our project leadership team meets with senior district leaders to report the progress of our work through an Executive Action Team process. We do this jointly with the school principals in our partnered schools.

“The Executive Action Team meetings give us the opportunity to look at sustainability, building capacity, and leveraging resources rather than quick fixes. The new tools that our leaders and teachers are learning with Instructional Empowerment have truly impacted the systems and quality of instruction in our buildings.”

Brandon White

Assistant Superintendent of Academics, South Bend Community School Corporation, IN

Validated Metrics That Show Real-Time Progress

Our keystone metrics were developed by our Applied Research Center team and are exclusive to Instructional Empowerment. These research-validated, predictive metrics show the real-time improvement of instructional systems within schools.

District leaders use our scientific indicators to track, in real time, the progress of the instructional systems that drive student growth and achievement. Scores from the Rigor Appraisal instrument are correlated with learning gains on both benchmark and state assessments.

“What we’re seeing is that there is a direct correlation between the Rigor Appraisal score and what we see in terms of student achievement. That gives us confidence in the tool. It also allows us as a school district to be predictive in terms of what we expect to see with student outcomes and make mid-year corrections.”

Troy Knoderer

Chief Academic Officer, MSD Lawrence Township, IN

Hope Is Not a Reform Strategy – Evidence Is

District leaders use our Applied Research Center’s leading metrics to confidently predict student outcomes – without additional student testing. You will know if you are on track to achieve your goals – no more waiting and hoping.

Every step of our partnerships is guided by real-time predictive data. Transparent data sharing gives district leaders full confidence in project progress.

Peer-Reviewed Research and Official Reports


*Before Instructional Empowerment was founded, many of its team members served at another company led by Michael Toth, CEO and Executive Director of Research. Some of the research featured on this website was conducted by the previous company’s Applied Research Center, or the data were collected there and later analyzed by Instructional Empowerment’s own Applied Research Center. Michael Toth and his team have perfected and applied an evidence-based school improvement approach and continue to achieve the same performance results at their new home, Instructional Empowerment.